NLQxform — From DBLP-QUAD Challenge to an Interactive Scholarly QA System

Competition entry → workshop paper → research assistant implementation → SIGIR 2025 demo.

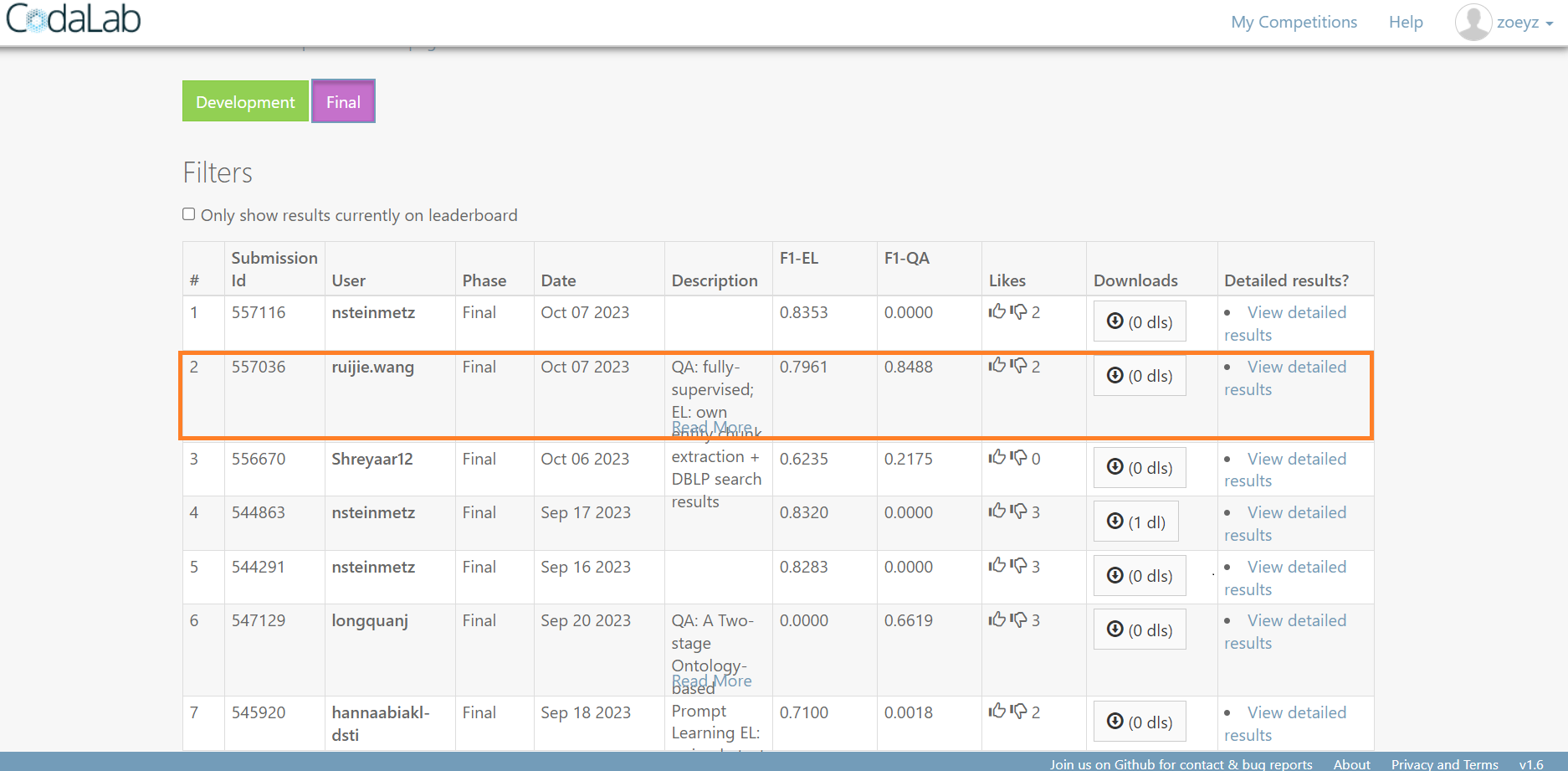

In September 2023 I participated in the Scholarly QALD challenge at ISWC 2023 (DBLP-QUAD: Knowledge Graph Question Answering over DBLP), building on approaches developed during my Master's thesis. My entry, a model-based text→SPARQL transformer, ranked 1st in the QA task and 2nd in entity linking on the competition leaderboard, and led to a workshop paper (NLQxform).

As a Research Assistant, I extended the system into NLQxform-UI, an interactive scholarly QA tool that exposes the translation pipeline and enables user-guided correction. The UI presents multiple beam candidates from the generator, so users can inspect, select, and edit candidate queries before execution. This supports transparent, human-in-the-loop refinement of model outputs. The work culminated in a SIGIR 2025 demo submission.

My role & contributions

- Adapted thesis methods to DBLP-QUAD: dataset preprocessing, entity/relation normalization and template analysis.

- Designed and fine-tuned BART-based text→SPARQL generators.

- Implemented an evaluation and iterative refinement workflow for identifying and addressing model errors.

- As Research Assistant, extended the pipeline into a web UI (NLQxform-UI): front-end implementation, back-end integration exposing stepwise translation and execution, and demo packaging for SIGIR.

Approach (high-level)

Pipeline highlights:

- Preprocessing & KG linking: entity linking and relation normalization tuned for DBLP schema.

- Query generation: BART fine-tuned for text→SPARQL with post-processing for complex aggregations; generator produces multiple beam candidates for downstream inspection.

- UI & interaction: human-in-the-loop workflow where users can inspect candidate queries, choose or edit a beam, and execute the final query to obtain results.
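The inspect, choose-or-edit, execute loop described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the NLQxform-UI implementation: `Candidate`, `rank_candidates`, `answer_question`, `fake_generate`, and `fake_execute` are hypothetical names, the SPARQL strings are made-up examples rather than real model outputs, and the generator and endpoint are replaced by stubs so the snippet runs without a model or network access. In a real setup the candidates would come from beam search over the fine-tuned BART model.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional


@dataclass
class Candidate:
    """One beam output: a SPARQL string and its sequence score (higher is better)."""
    sparql: str
    score: float


def rank_candidates(candidates: List[Candidate]) -> List[Candidate]:
    """Sort best-first and drop candidates that differ only in whitespace."""
    seen = set()
    unique = []
    for cand in sorted(candidates, key=lambda c: c.score, reverse=True):
        normalized = " ".join(cand.sparql.split())
        if normalized not in seen:
            seen.add(normalized)
            unique.append(Candidate(normalized, cand.score))
    return unique


def answer_question(
    question: str,
    generate: Callable[[str], List[Candidate]],
    execute: Callable[[str], Any],
    choice: int = 0,
    edited_query: Optional[str] = None,
):
    """Run one human-in-the-loop round.

    `choice` is the index of the candidate the user picked; `edited_query`,
    if given, is the user's manual edit and overrides the picked candidate.
    Returns the query that was executed together with its result.
    """
    ranked = rank_candidates(generate(question))
    if not 0 <= choice < len(ranked):
        raise ValueError("choice does not refer to an available candidate")
    final_query = edited_query if edited_query is not None else ranked[choice].sparql
    return final_query, execute(final_query)


def fake_generate(question: str) -> List[Candidate]:
    """Stub generator (assumption): stands in for beam search over the model."""
    return [
        Candidate("SELECT ?paper WHERE { ?paper dblp:authoredBy ?a }", -0.21),
        Candidate("SELECT  ?paper  WHERE { ?paper dblp:authoredBy ?a }", -0.35),
        Candidate("SELECT (COUNT(?paper) AS ?n) WHERE { ?paper dblp:authoredBy ?a }", -0.80),
    ]


def fake_execute(query: str) -> dict:
    """Stub executor (assumption): stands in for a call to a SPARQL endpoint."""
    return {"executed": query}


if __name__ == "__main__":
    # The user picks the second distinct candidate instead of the top beam.
    query, result = answer_question(
        "How many papers did this author write?", fake_generate, fake_execute, choice=1
    )
    print(query)
    print(result)
```

Passing the generator and executor in as callables keeps the selection logic separate from the model and the endpoint, so either can be swapped out without touching the interaction step.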

Results (competition)

Public leaderboard snapshot and my private submission (for provenance).

Following this result, a workshop paper describing the system was written and accepted.

Public submissions on the leaderboard (link: CodaLab — public submissions).

Example: my private submission (used for internal evaluation / reproducibility checks).

Artifacts & links

- Workshop paper (CEUR 2023): NLQxform: A Language Model-based Question to SPARQL Transformer.

- SIGIR 2025 (demo): NLQxform-UI: An Interactive and Intuitive Scholarly Question Answering System.

- Code: NLQxform | NLQxform-UI.

- Challenge: DBLP-QUAD / Scholarly QALD (CodaLab).