The aim of this project is to build an intelligent conversational agent. Since all the datasets come from the movie domain, it can also be regarded as a movie-domain conversational agent. Using the datasets provided by the teaching team, the agent should be able to answer five types of questions: Factual Questions, Embedding-based Questions, Multimedia Questions, Recommendation Questions and Crowdsourcing Questions. Overall, the agent I built answers all five types well, as the examples below show. On top of this, I added some extensions: the agent can answer multi-entity questions, give answers from several sources, and send SPARQL queries to an online endpoint and return the results.
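This is a minimal sketch of the online lookup. It assumes the public Wikidata Query Service (`https://query.wikidata.org/sparql`) is the online source; the report does not name the endpoint. The helper names `build_wikidata_request` and `run_query` are hypothetical. The sketch turns the entity and property IDs produced by entity and intent recognition into a one-triple SELECT query.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the public Wikidata Query Service is the online SPARQL endpoint.
WIKIDATA_SPARQL = "https://query.wikidata.org/sparql"


def build_wikidata_request(entity_id: str, property_id: str) -> urllib.request.Request:
    """Build a GET request asking for every value of (entity, property).

    Hypothetical helper: entity_id is e.g. "Q1410031", property_id e.g. "P577".
    The wd:/wdt: prefixes are predefined on the Wikidata endpoint.
    """
    query = f"SELECT ?value WHERE {{ wd:{entity_id} wdt:{property_id} ?value . }}"
    url = WIKIDATA_SPARQL + "?" + urllib.parse.urlencode({"query": query, "format": "json"})
    return urllib.request.Request(
        url,
        headers={
            "Accept": "application/sparql-results+json",
            # Wikimedia asks clients to send a descriptive User-Agent.
            "User-Agent": "movie-agent-sketch/0.1 (course project)",
        },
    )


def run_query(request: urllib.request.Request, timeout: float = 10.0) -> list:
    """Send the request and return the bound ?value strings (needs network access)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        data = json.load(response)
    return [row["value"]["value"] for row in data["results"]["bindings"]]
```

For example, `run_query(build_wikidata_request("Q1410031", "P577"))` asks for the publication date of Tom Meets Zizou. In the crowdsourcing example below, the online answer for that entity and property was `2011-01-01T00:00:00Z`.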

In this project, the web interface for interacting with humans is realised by the Speakeasy web-based infrastructure provided by the teaching team. Students only need to complete the back-end part and call the corresponding API.

Examples

Here are back-end outputs from some examples during testing.

Factual Question & Embedding-based Question

Question: Who directed the Bridge on the River Kwai

Sentence Type: questions

Intent: {'intent': 'director', 'uri': rdflib.term.URIRef('http://www.wikidata.org/prop/direct/P57')}

Entities: [{'entity': 'The Bridge on the River Kwai', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q188718')}]

no answer in crowd data

answer from graph

answer from embeddings

From graph: The director of The Bridge on the River Kwai: David Lean. -----------AND------------From embeddings: The director of The Bridge on the River Kwai: Jack Hawkins | David Lean | Harold Goodwin | William Holden | Michael Wilson.

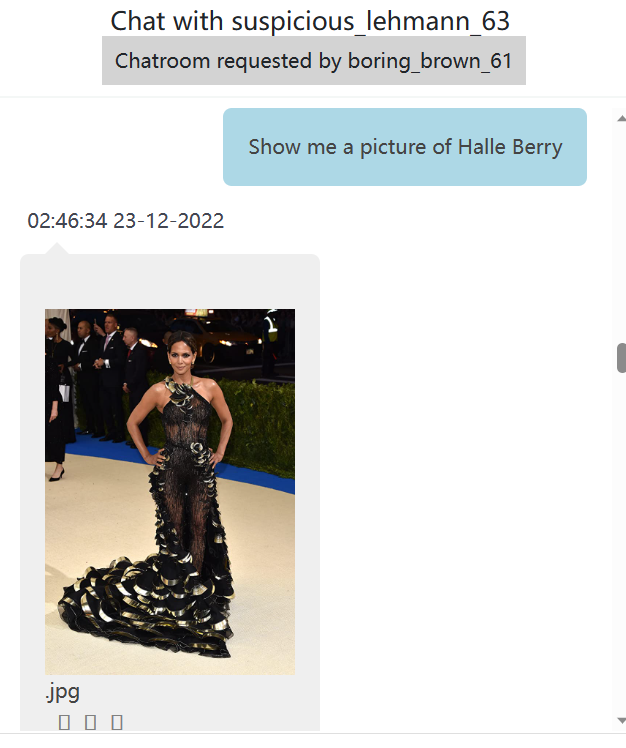
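The embedding answer lists five candidates rather than one. This is a minimal sketch of how such a ranking can be produced. It assumes TransE-style embeddings, in which a true triple (head, relation, tail) satisfies head + relation ≈ tail. The report does not state which embedding model or distance it uses, and the toy 2-D vectors below are invented for illustration.

```python
import math


def top_k_tails(head, relation, entity_vectors, k=5):
    """Rank candidate tails by L2 distance to head + relation (TransE-style scoring).

    head, relation: lists of floats (embedding vectors).
    entity_vectors: dict mapping entity label -> vector (hypothetical layout).
    Returns the k labels whose vectors lie closest to head + relation.
    """
    target = [h + r for h, r in zip(head, relation)]
    ranked = sorted(entity_vectors, key=lambda label: math.dist(target, entity_vectors[label]))
    return ranked[:k]


# Toy vectors, not real embeddings.
entities = {"A": [0.0, 0.0], "B": [1.0, 0.0], "C": [5.0, 5.0], "D": [1.2, 0.1]}
relation = [1.0, 0.0]
print(" | ".join(top_k_tails(entities["A"], relation, entities, k=2)))  # B | D
```

Nearest neighbours of head + relation are plausible candidates, not verified facts. This is consistent with the embedding answer above placing David Lean among other people, while the graph answer gives only him.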

Multimedia Question

Question: Show me a picture of Halle Berry

Sentence Type: questions

Intent: {'intent': 'PICTURE', 'uri': None}

Entities: [{'entity': 'Halle Berry', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q1033016')}]

Image

imdb ids: nm0000932

image:0353/rm3257480192.jpg
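The multimedia answer maps the recognised entity to an IMDb ID (nm0000932 above) and then to an image file. This is a minimal sketch of the second step. It assumes the image metadata is a list of records with hypothetical `movie`, `cast` and `img` fields. The report does not show the real file layout or selection rule, so "prefer the record with the fewest IDs" is only an illustrative choice.

```python
def pick_image(imdb_ids, images):
    """Return "image:<path>" for a record that contains every requested IMDb ID.

    images: list of dicts with hypothetical keys "movie", "cast" (lists of IMDb IDs)
    and "img" (relative file path). Among matching records, prefer the one with the
    fewest IDs overall, i.e. the least crowded picture. Returns None if nothing matches.
    """
    wanted = set(imdb_ids)
    matches = [rec for rec in images if wanted <= set(rec["movie"]) | set(rec["cast"])]
    if not matches:
        return None
    best = min(matches, key=lambda rec: len(rec["movie"]) + len(rec["cast"]))
    return "image:" + best["img"]


# Toy records with invented IDs and paths (not taken from the dataset).
images = [
    {"movie": ["tt9999990"], "cast": ["nm9999991", "nm9999992"], "img": "0001/a.jpg"},
    {"movie": [], "cast": ["nm9999991"], "img": "0002/b.jpg"},
    {"movie": ["tt9999993"], "cast": ["nm9999994"], "img": "0003/c.jpg"},
]
print(pick_image(["nm9999991"], images))  # image:0002/b.jpg
```

When a question yields two IDs, as in the Batman example further down (tt1127886 and ch0000177), the same subset test requires both to appear in one record.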

Recommendation Question

Question: Given that I like The Lion King, and The Beauty and Beast, can you recommend some movies?

Sentence Type: questions

Intent: {'intent': 'RECOMMEND', 'uri': None}

Entities: [{'entity': 'The Lion King', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q134138')}, {'entity': 'Beauty and the Beast', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q19946102')}]

My recommendations are:Beauty and the Beast final poster, Hidden Figures, Cinderella, Maleficent, Aladdin
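This is a minimal sketch of one common way to answer recommendation questions, assuming every movie has an embedding vector. It averages the vectors of the liked movies and ranks the remaining movies by cosine similarity to that average. The report does not say how its recommendations are computed, so this is an illustrative technique, not the author's method. The movie names and vectors are invented.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def recommend(liked, movie_vectors, k=5):
    """Rank unseen movies by cosine similarity to the mean vector of the liked ones."""
    dim = len(next(iter(movie_vectors.values())))
    centroid = [sum(movie_vectors[m][i] for m in liked) / len(liked) for i in range(dim)]
    # Exclude the liked titles, otherwise they would top the list themselves.
    candidates = [m for m in movie_vectors if m not in liked]
    candidates.sort(key=lambda m: cosine(centroid, movie_vectors[m]), reverse=True)
    return candidates[:k]


vectors = {
    "movie_a": [1.0, 0.0],
    "movie_b": [0.9, 0.1],
    "movie_c": [0.95, 0.05],
    "movie_d": [0.0, 1.0],
    "movie_e": [-1.0, 0.0],
}
print(recommend(["movie_a", "movie_b"], vectors, k=2))  # ['movie_c', 'movie_d']
```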

Crowdsourcing Question & Online Query

Question: Can you tell me the publication date of Tom Meets Zizou?

Sentence Type: questions

Intent: {'intent': 'publication date', 'uri': rdflib.term.URIRef('http://www.wikidata.org/prop/direct/P577')}

Entities: [{'entity': 'Tom Meets Zizou', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q1410031')}]

answer from crowd data

answer searched online

From crowd data: My answer is: 2011-01-01 [Crowd, inter-rater agreement 0.040, The answer distribution for this specific task was 0 support votes and 3 reject votes]. -----------AND------------From online data: The publication date of Tom Meets Zizou: 2011-01-01T00:00:00Z.
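The crowd answer above reports an inter-rater agreement score alongside the vote counts. The report does not name the measure. Fleiss' kappa is a common choice for several raters assigning categorical labels, so a minimal sketch of it is given here together with a simple support/reject majority vote. The function names and toy rating matrices are illustrative assumptions.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa for a ratings matrix.

    counts[i][j] = number of raters who put item i into category j
    (e.g. j = 0 for "support", j = 1 for "reject"). Every row must sum to
    the same number of raters n >= 2.
    """
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    n_categories = len(counts[0])
    # Share of all ratings that fell into each category.
    p = [sum(row[j] for row in counts) / (n_items * n_raters) for j in range(n_categories)]
    # Observed agreement per item, then averaged over items.
    per_item = [(sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1)) for row in counts]
    p_bar = sum(per_item) / n_items
    # Agreement expected by chance.
    p_e = sum(x * x for x in p)
    if p_e == 1.0:
        return 1.0  # degenerate case: every rating fell into a single category
    return (p_bar - p_e) / (1 - p_e)


def crowd_verdict(support, reject):
    """Majority vote over the support/reject votes of one crowd task."""
    if support == reject:
        return "tie"
    return "supported" if support > reject else "rejected"


print(fleiss_kappa([[2, 1], [1, 2]]))  # about -0.333
print(crowd_verdict(0, 3))  # rejected
```

A kappa near 0, like the 0.040 above, means the raters agreed about as often as chance alone would predict.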

Multi-entity Question

Question: Who is the director of Good Will Hunting and the Bridge on the River Kwai?

Sentence Type: questions

Intent: {'intent': 'director', 'uri': rdflib.term.URIRef('http://www.wikidata.org/prop/direct/P57')}

Entities: [{'entity': 'Good Will Hunting', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q193835')}, {'entity': 'The Bridge on the River Kwai', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q188718')}]

no answer in crowd data

answer from graph

answer from embeddings

time:2.365527629852295

response:From graph: The director of Good Will Hunting: Gus Van Sant. & The director of The Bridge on the River Kwai: David Lean. -----------AND------------From embeddings: The director of Good Will Hunting: Harmony Korine | Ben Affleck | Gus Van Sant | Matt Damon | Vik Sahay. & The director of The Bridge on the River Kwai: Jack Hawkins | David Lean | Harold Goodwin | William Holden | Michael Wilson.
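The multi-entity, multi-source response above follows a visible pattern:

- each entity gets one "The <relation> of <entity>: <values>." clause;
- clauses are joined with " & ";
- alternative values are joined with " | ";
- source blocks are joined by the AND separator.

This is a minimal sketch that reproduces the format from already-computed answers. The function names and dictionary layout are hypothetical, and the separators are copied from the outputs above.

```python
# Separator between source blocks, as it appears in the logged responses.
SOURCE_SEPARATOR = " -----------AND------------"


def format_source(source, relation, answers):
    """Build one source block, e.g. 'From graph: The director of X: A. & The director of Y: B.'

    answers: dict mapping entity label -> list of answer labels (insertion order kept).
    """
    clauses = [
        f"The {relation} of {entity}: {' | '.join(values)}."
        for entity, values in answers.items()
    ]
    return f"From {source}: " + " & ".join(clauses)


def combine_sources(blocks):
    """Join the per-source blocks in the order they were produced."""
    return SOURCE_SEPARATOR.join(blocks)


graph_block = format_source(
    "graph",
    "director",
    {"Good Will Hunting": ["Gus Van Sant"], "The Bridge on the River Kwai": ["David Lean"]},
)
print(graph_block)
```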

Correct Classification of Sentence Types

Question: hi there

Sentence Type: greetings

Intent: None

Entities: None

time:5.5789947509765625e-05

response:Hi!

Question: Hi there, I'd like to know the picture of batman

Sentence Type: questions

Intent: {'intent': 'PICTURE', 'uri': None}

Entities: [{'entity': 'Here', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q12124760')}, {'entity': 'Batman', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q2695156')}, {'entity': 'Ike', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q66481379')}]

Image

imdb ids: tt1127886 ch0000177

any picture.....

time:14.352812051773071

response:image:0527/rm4168713472.jpg
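The two questions above show why a rule such as "starts with a greeting" is not enough: the second message opens with "Hi there" but is a picture request. This is a minimal rule-based sketch using a small hypothetical greeting vocabulary. Under this rule, a message counts as a greeting only if every word in it is a greeting word. The report does not describe its own classifier, so this is illustrative only.

```python
import re

# Hypothetical vocabulary; a real agent would need a larger list.
GREETING_WORDS = {"hi", "hello", "hey", "there", "good", "morning", "afternoon", "evening"}


def sentence_type(message: str) -> str:
    """Return "greetings" if the message contains only greeting words, else "questions"."""
    words = re.findall(r"[a-z']+", message.lower())
    if words and all(word in GREETING_WORDS for word in words):
        return "greetings"
    return "questions"


print(sentence_type("hi there"))  # greetings
print(sentence_type("Hi there, I'd like to know the picture of batman"))  # questions
```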

Fuzzy Recognition of Intent and Entity

Question: what is the description of Going with the Wind

Sentence Type: questions

Intent: {'intent': 'node description', 'uri': rdflib.term.URIRef('http://schema.org/description')}

Entities: [{'entity': 'Gone with the Wind', 'uri': rdflib.term.URIRef('http://www.wikidata.org/entity/Q2870')}]

no answer in crowd data

answer from graph

no answer in embeddings

time:12.450563192367554

response:From graph: My answer is: 1936 novel by Margaret Mitchell.
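Here the misspelled title "Going with the Wind" still resolved to "Gone with the Wind", and "description" resolved to the "node description" intent. This is a minimal sketch of such fuzzy matching using Python's standard `difflib`. The report does not say which matching method it uses, and the label lists here are small hypothetical excerpts.

```python
import difflib


def fuzzy_lookup(mention, labels, cutoff=0.6):
    """Return the label most similar to `mention`, or None if none reaches `cutoff`.

    Matching is case-insensitive; difflib's similarity ratio lies between 0 and 1.
    """
    by_lower = {label.lower(): label for label in labels}
    matches = difflib.get_close_matches(mention.lower(), by_lower, n=1, cutoff=cutoff)
    return by_lower[matches[0]] if matches else None


movie_titles = ["Gone with the Wind", "The Lion King", "Good Will Hunting"]
intents = ["node description", "director", "publication date"]
print(fuzzy_lookup("Going with the Wind", movie_titles))  # Gone with the Wind
print(fuzzy_lookup("description", intents))  # node description
```

The `cutoff` trades recall for precision. A lower value tolerates worse typos but is more likely to map an unrelated word onto some title.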

Below is an example screenshot of the front-end when interacting through the Speakeasy API: